The Ultimate Guide to Automated Security Validation (ASV) in 2025

LAST UPDATED ON JANUARY 28, 2026

Definition of Automated Security Validation

Automated Security Validation (ASV) is a proactive cybersecurity practice in which an organization deploys automated software solutions to continuously discover and validate its security controls through safe stress-testing. ASV can both simulate and emulate real-world attack scenarios to identify exploitable vulnerabilities and weaknesses specific to an organization’s environment, while also considering the global threat landscape.

By automating this validation process, ASV ensures that organizations maintain an up-to-date understanding of their security posture, prioritize high-risk threats, and strengthen defenses against evolving adversary tactics.

How Automated Security Validation Works

Automated Security Validation operates by executing adversary-grade behavior in a safe, controlled environment, going beyond theoretical checks. By using the tactics, techniques, and procedures (TTPs) of real-world threat actors and malware campaigns observed in the wild, ASV reveals how defenses perform under realistic pressure. This provides an accurate view of vulnerabilities and security gaps, rather than a false sense of security.

ASV solutions continuously interact with the live environment, performing the equivalent of vulnerability assessments, red teaming, and penetration testing at context-aware machine speed. This ensures every action is backed by actionable evidence, revealing which vulnerabilities are exploitable, which compensating controls block attacks, whether unblocked attacks still trigger alerts, and where defenses fail silently.

Unlike traditional security testing methods, which provide a static snapshot of security at a specific moment in time, automated security validation software works continuously, adapting in real time to changes in an organization’s environment, including newly published CVEs, security control configurations, user rights and group permission changes, security policy updates, and more.

This ensures continuous coverage, maintaining both breadth and immediacy, while eliminating the gaps between periodic tests and addressing blind spots.
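To make change-driven validation concrete, here is a minimal Python sketch, assuming a hypothetical feed of environment change events and a hypothetical mapping from change types to the validation suites worth re-running. The names `SUITES_BY_CHANGE`, `ChangeEvent`, and `plan_revalidation` are illustrative only and do not correspond to any vendor API.

```python
from dataclasses import dataclass

# Hypothetical mapping: which validation suites to re-run for each kind of change.
SUITES_BY_CHANGE = {
    "new_cve": ["vulnerability_exploitation"],
    "control_config": ["prevention", "detection"],
    "permission_change": ["identity_attack_paths"],
    "policy_update": ["prevention", "segmentation"],
}


@dataclass(frozen=True)
class ChangeEvent:
    kind: str   # expected to be one of the keys in SUITES_BY_CHANGE
    asset: str  # affected asset or scope


def plan_revalidation(events):
    """Return an ordered, de-duplicated list of (suite, asset) jobs."""
    jobs, seen = [], set()
    for event in events:
        # Unknown change kinds map to no suites rather than raising an error.
        for suite in SUITES_BY_CHANGE.get(event.kind, []):
            job = (suite, event.asset)
            if job not in seen:
                seen.add(job)
                jobs.append(job)
    return jobs


events = [
    ChangeEvent("control_config", "edge-fw-01"),
    ChangeEvent("permission_change", "corp.example.local"),
    ChangeEvent("control_config", "edge-fw-01"),  # repeated change adds no new jobs
]
print(plan_revalidation(events))
```

De-duplicating jobs keeps a burst of related changes (for example, several edits to the same firewall) from triggering redundant runs; a production setup would likely also keep scheduled full runs rather than relying on change events alone.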

Key Components and Powerhouses of Automated Security Validation (ASV)

TL;DR: Adversarial Exposure Validation (AEV) is a key enabler of Automated Security Validation (ASV), providing continuous, automated testing of implemented security measures to verify the exploitability of vulnerabilities in real-world conditions.

Gartner defines AEV as a process that simulates attack scenarios to determine whether a theoretical exposure, such as an unpatched system, presents a real, exploitable risk.

AEV consists of Breach and Attack Simulation (BAS) and Automated Penetration Testing software solutions that continuously validate the exploitability of vulnerabilities across an organization’s security stack, assessing whether the exploitation of identified vulnerabilities can be prevented or, if not, detected by organizational defenses.

This approach provides data-backed proof of exploitability within the context of the organization’s unique IT environment. Teams can then deprioritize remediation and patching efforts for non-exploitable (theoretical) issues and focus on the critical attack vectors and paths that can bypass security measures and pose significant business risks.

This kind of validated filtering can dramatically reduce the patching and remediation backlog that requires immediate attention.

Here are a few examples in context:

- A database flaw with a high CVSS score but no network access is deprioritized.

- A CVE with a 9.8 CVSS score whose exploitation is blocked by a WAF is deprioritized.

- Low-severity issues that, when chained together, grant access to domain admin accounts are prioritized and flagged for immediate attention.
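The triage logic behind these examples can be sketched in a few lines of Python. This is a simplified illustration, assuming each finding already carries its validation results (reachability, whether a compensating control blocked the exploit, and whether it sits on a validated attack path); the `Finding` fields and the 7.0 CVSS cut-off are hypothetical choices, not any vendor's scoring model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    name: str
    cvss: float               # theoretical base score reported by the scanner
    reachable: bool           # can an attacker reach the vulnerable service at all?
    blocked_by_control: bool  # did a compensating control (e.g., a WAF) stop the exploit?
    in_critical_chain: bool   # is it part of a validated path to a critical asset?


def triage(finding: Finding) -> str:
    # A validated attack path to a critical asset outranks the raw score.
    if finding.in_critical_chain:
        return "prioritize"
    # Unreachable or blocked exposures are deprioritized regardless of CVSS.
    if not finding.reachable or finding.blocked_by_control:
        return "deprioritize"
    # Otherwise fall back to theoretical severity (hypothetical 7.0 cut-off).
    return "prioritize" if finding.cvss >= 7.0 else "schedule"


# The three examples above, expressed as hypothetical findings.
db_flaw = Finding("database flaw", 8.8, reachable=False, blocked_by_control=False, in_critical_chain=False)
waf_blocked = Finding("WAF-blocked CVE", 9.8, reachable=True, blocked_by_control=True, in_critical_chain=False)
chained = Finding("low-severity misconfiguration", 3.1, reachable=True, blocked_by_control=False, in_critical_chain=True)

for f in (db_flaw, waf_blocked, chained):
    print(f.name, "->", triage(f))
```

The order of the checks is the point: validated context (an attack path, reachability, a blocking control) is evaluated before the theoretical score is consulted at all.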

This continuous and automated security validation process ensures that defenses are regularly tested against evolving threats, such as ransomware, phishing, and APTs, within a Continuous Threat Exposure Management (CTEM) framework, providing a dynamic, up-to-date view of an organization’s security posture.

Automating Security Validation with Adversarial Exposure Validation Tools

There are two main types of Adversarial Exposure Validation tools that deliver attacker-centric automated security validation: BAS and Automated Penetration Testing.

- Breach and Attack Simulation (BAS): This technology continuously simulates adversary tactics like ransomware payloads, lateral movement, and data exfiltration, ensuring security controls perform as intended. Mapped to the MITRE ATT&CK framework, BAS offers comprehensive and ongoing validation, making it far more effective than one-time exercises.

- Automated Penetration Testing: A step beyond traditional pentesting, this solution automates the chaining of vulnerabilities to simulate real-world attack paths. It’s particularly effective in exposing complex attack vectors, such as Kerberoasting or privilege escalation through mismanaged identities. Unlike annual pentests, Automated Penetration Testing allows teams to run these tests continuously, adapting to emerging threats and evolving attack techniques.
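Because BAS results are mapped to MITRE ATT&CK, they can be rolled up per technique into prevention and detection metrics. The sketch below assumes a hypothetical list of simulation outcomes, where each outcome is `prevented`, `detected` (not blocked but alerted), or `missed`; the technique IDs are real ATT&CK identifiers, but the results are invented for illustration.

```python
from collections import Counter

# Hypothetical BAS results: (MITRE ATT&CK technique ID, outcome).
# "prevented" = blocked, "detected" = not blocked but alerted, "missed" = neither.
results = [
    ("T1486", "prevented"),      # Data Encrypted for Impact (ransomware payload)
    ("T1059.001", "prevented"),  # Command and Scripting Interpreter: PowerShell
    ("T1021", "detected"),       # Remote Services (lateral movement)
    ("T1041", "missed"),         # Exfiltration Over C2 Channel
]


def summarize(results):
    counts = Counter(outcome for _, outcome in results)
    total = len(results) or 1  # avoid division by zero on an empty run
    return {
        "prevention_rate": counts["prevented"] / total,
        "detection_rate": counts["detected"] / total,
        # Techniques that were neither blocked nor alerted are the silent gaps.
        "gaps": sorted(tech for tech, outcome in results if outcome == "missed"),
    }


print(summarize(results))
```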

A Real-Life Case: Adversarial Exposure Validation (AEV) with BAS in Action

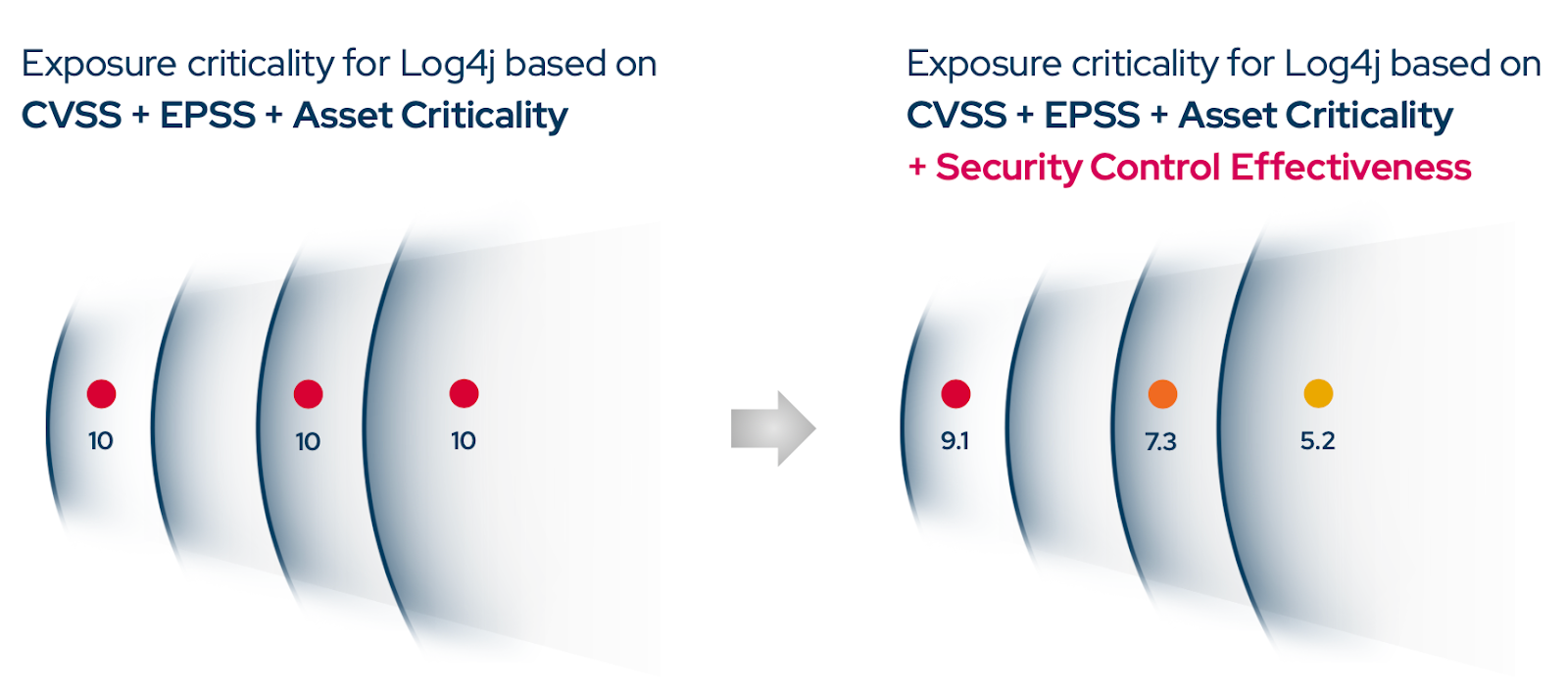

Take the Log4j vulnerability as an example. When it first emerged, traditional scanners flagged it across the board, assigning it a CVSS score of 10.0 (Critical), marking it as highly exploitable according to EPSS, and showing it as prevalent across asset inventories.

This is where BAS, a core technology in Adversarial Exposure Validation, changes the game. BAS doesn’t just highlight vulnerabilities; it validates their exploitability in context.

In the case of Log4j, BAS allows teams to establish that not every instance requires immediate action. Here’s how the severity score decreased as each layer of context was applied:

- Base CVSS score: 10.0 (Critical)

- After factoring in EPSS (likelihood of exploitation): 9.1

- After factoring in asset criticality: 7.3

- After factoring in security control effectiveness (whether compensating controls, like a WAF, blocked the exploitation attempt): 5.2

At each stage, the severity score dropped incrementally, from 10.0 to 9.1, then to 7.3, and finally to 5.2, based on the organization’s unique environment. Instead of triggering an immediate, all-hands response, the risk became a manageable issue that could be addressed within regular patch cycles.

(Disclaimer: This calculation was performed using the Picus Exposure Score module, which is native to the Picus Platform.)

Figure 1. Re-assessing Exposure Criticality of Log4j with Picus Platform

On the flip side, BAS can uncover more severe threats. A low-priority misconfiguration in a SaaS app could chain into data exfiltration, escalating it from "medium" to "urgent." By simulating real-world attack paths, BAS ensures that resources are directed to the exposures that truly matter.

Remediation Validation (Closing the Loop)

A critical yet often overlooked step in automated security validation is remediation validation, which confirms that fixes and patches actually resolve the exposure.

After vulnerabilities are addressed, ASV tools rerun the relevant attack simulations to confirm the risk is mitigated. This "closed-loop" validation provides immediate feedback, which is especially valuable in large enterprises where partial fixes or miscommunication can occur. ASV platforms also help prioritize remediation by offering actionable recommendations that guide teams on which issues to address first. The result is confidence that vulnerabilities are fully eliminated and an improved overall security posture.
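A closed-loop check boils down to comparing the same simulations before and after a fix. The following Python sketch assumes hypothetical simulation IDs and a boolean per run indicating whether the simulated attack succeeded; it illustrates the idea and does not reflect how any particular ASV product stores results.

```python
def validate_remediation(before, after):
    """Compare attack outcomes before and after remediation.

    Both dicts map a simulation ID to True if the simulated attack succeeded
    (i.e., was not blocked). Simulations missing from `after` were not rerun.
    Simulations blocked both before and after fall into no bucket.
    """
    fixed, still_open, regressions, not_rerun = [], [], [], []
    for sim, succeeded_before in sorted(before.items()):
        if sim not in after:
            not_rerun.append(sim)
        elif succeeded_before and not after[sim]:
            fixed.append(sim)
        elif succeeded_before and after[sim]:
            still_open.append(sim)   # partial or ineffective fix
        elif not succeeded_before and after[sim]:
            regressions.append(sim)  # previously blocked, now succeeds
    return {"fixed": fixed, "still_open": still_open,
            "regressions": regressions, "not_rerun": not_rerun}


before = {"sim-ransomware": True, "sim-lateral-smb": True, "sim-exfil-dns": False}
after = {"sim-ransomware": False, "sim-lateral-smb": True, "sim-exfil-dns": False}
print(validate_remediation(before, after))
```

Partial fixes surface in `still_open`, and controls that stopped working after a change surface in `regressions`, which is the kind of feedback a one-off retest can easily miss.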

Automated Security Validation (ASV) vs. Traditional Security Testing Approaches

Automated Security Validation represents an evolution of security testing, and it differs significantly from traditional approaches like periodic penetration tests, vulnerability scanning, and red team exercises.

Below is a comparative analysis highlighting differences in scope, frequency, level of automation, and value provided.

Manual Penetration Testing vs. Automated Security Validation

Disclaimer: Automated Penetration Testing (APT) is one of the most practical use cases of Automated Security Validation. APT technologies act as the engine of ASV, representing the “assume breach” and “post breach” layer of security validation.

They focus on what happens after an attacker has gained a foothold (answering questions such as “What if a particular employee clicks a phishing email?”), not on peripheral or pre-breach checks.

When making this comparison, it’s helpful to view Automated Penetration Testing as one of the powerhouses of Automated Security Validation: the component that drives its assume-breach capability and enables true adversarial testing at scale.

Manual penetration testing was created as a craft, not a compliance task. Its purpose has always been to challenge assumptions, uncover logic flaws and think like an adversary in ways that cannot be templated. The strength of a pentest comes from depth and human creativity, which is why testers concentrate on narrow slices of an environment where that creativity makes the greatest impact.

Automated Security Validation was developed to address the rest of the attack surface.

As Volkan Ertürk, CTO of Picus Security, notes, “it was never designed to replace pentesters”.

Instead, ASV exists because large portions of modern environments do not require creative analysis at all.

This is where Automated Penetration Testing fits in. It applies automation to the parts of attacker behavior that do not rely on human intuition.

- It takes over the repetitive validation loops that add little value when performed manually.

- It maintains continuous coverage in the background, allowing human testers to focus on complex edge cases and logic flaws.

- It brings a level of consistency and chaining capability beyond that of most junior or mid-level testers, automatically linking weaknesses in ways that resemble the steps of a sophisticated adversary.
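Chaining weaknesses is essentially a path search over an attack graph. Here is a minimal Python sketch using breadth-first search, assuming a small, hand-written graph in which each edge records the weakness that enables the step; the node names and weaknesses are hypothetical, and real tools typically build far richer graphs from live enumeration.

```python
from collections import deque

# Hypothetical attack graph: node -> list of (next node, weakness enabling the step).
ATTACK_GRAPH = {
    "phished-workstation": [("svc-sql account", "Kerberoastable service account with weak password")],
    "svc-sql account": [("file-server", "local admin rights via password reuse")],
    "file-server": [("Domain Admins", "cached Domain Admin credentials on host")],
}


def shortest_attack_path(graph, start, target):
    """Breadth-first search returning the fewest-step chain of (from, weakness, to)."""
    parent = {start: None}  # node -> (previous node, weakness used to reach it)
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            steps = []
            while parent[node] is not None:
                prev, weakness = parent[node]
                steps.append((prev, weakness, node))
                node = prev
            return steps[::-1]
        for nxt, weakness in graph.get(node, []):
            if nxt not in parent:
                parent[nxt] = (node, weakness)
                queue.append(nxt)
    return None  # target is unreachable from start


for src, weakness, dst in shortest_attack_path(ATTACK_GRAPH, "phished-workstation", "Domain Admins"):
    print(f"{src} -> {dst} via {weakness}")
```

Each edge might be rated low or medium on its own; it is the complete path that turns them into a domain-compromise risk.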

The outcome is not a substitute for human creativity, but a disciplined layer of automation that elevates where human effort is spent.

Key Differences

| Category | Manual Penetration Testing | Automated Security Validation (ASV), enabler technology: Automated Penetration Testing (e.g., Picus APV) |
|---|---|---|
| Coverage | Rarely reaches beyond 10–15 percent of a domain. Manual inspection cannot scale to thousands of AD objects, accounts and trust relationships. | Broad coverage across the full in-scope environment. Continuously tests attacker steps (enumeration, credential access, lateral movement, privilege escalation) and maps real attack paths. |
| Powerhouses (Key Enabler Technologies) | Human expertise plus customized tools and binaries; results depend entirely on the tester's personal experience. | Automated penetration testing (APT) solutions represent the “assume breach” and “post breach” layer of Automated Security Validation, focusing on what happens after an attacker is already inside rather than on peripheral testing. |
| Frequency | Performed annually or a few times per year due to cost, disruption and manual workload. Many assets are tested once a year or less. Produces a point-in-time snapshot that becomes outdated quickly. | Runs continuously or on demand. Reduces test gaps from months to days. Misconfigurations, policy changes and permission issues are detected soon after they appear instead of remaining hidden for a year. Provides a live, always-current view of exposure. |
| Automation & Consistency | Depends entirely on human expertise. Results vary between testers, and even between engagements by the same tester. Not easily repeatable without re-engagement. Cost scales with frequency. | Highly automated. Tests run consistently at any time. Provides repeatability, stable quality and fixed-cost validation. Supports frequent validation with minimal additional cost and reduces the mundane tasks of in-house pentesters. |
| Depth vs Breadth | Excellent at deep, creative analysis: logic flaws, novel attack paths, complex chains. May uncover issues automation can never identify. | Excels at breadth. Continuously and stealthily detects known attack behaviors, common misconfigurations and exploitable weaknesses. Does not replace human creativity but covers the majority of sophisticated real-world attack vectors on a continuous basis. |
| Value and Context | Produces a list of findings but may not show how they interconnect. Prioritization is often left to the defender. Retesting requires a separate engagement (no remediation validation). | Provides contextual, validated risk. Shows which findings can be chained into real attack paths (for example, to Domain Admin). Automatically retests fixes for assurance. Helps teams focus on the issues that actually lead to compromise and business disruption. |
| Overall Role | Deep, point-in-time expert analysis suited for complex scenarios, audits or regulatory needs. | Continuous, scalable, automated assurance that monitors exposures daily and validates defenses against real attacker techniques. Complements human pentesting rather than replacing it. |

Vulnerability Scanning vs. Automated Security Validation

Disclaimer: Vulnerability Scanning and Automated Security Validation (ASV) both serve critical roles in identifying weaknesses, but they address different needs within an organization's security strategy. While vulnerability scanning focuses on discovery, ASV provides validation in context.

Many security validation vendors integrate with vulnerability scanning tools to enhance asset mapping, as discovery is the foundation of the validation process. Without it, the validation step would be ineffective. Therefore, these two practices aren't in competition; rather, they work together, with scanning feeding into validation to produce smarter, more comprehensive results.

Vulnerability scanning provides a snapshot of potential issues across the environment, but it doesn’t validate whether these vulnerabilities can actually be exploited by attackers in the context of the organization's specific environment.

ASV, on the other hand, goes beyond discovery to validate vulnerabilities in the context of real-world attacks.

As Volkan Ertürk, CTO of Picus Security, explains, “True security validation depends on understanding your entire attack surface and identifying the vulnerabilities that matter most to your environment. That’s why we believe discovery is crucial, which is why Attack Surface Validation (ASV) is seamlessly integrated and available at no extra cost in our platform.”

While scanning identifies where vulnerabilities exist, ASV simulates adversarial tactics to demonstrate whether these vulnerabilities could actually be exploited by an attacker in the real environment.
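The hand-off from scanning to validation can be pictured as a join on (asset, CVE). The Python sketch below assumes hypothetical scanner output with CVSS scores and a hypothetical set of validation outcomes; CVE-2021-44228 is the real Log4j identifier, while the other CVE IDs are placeholders.

```python
# Hypothetical scanner output: (asset, CVE) -> CVSS base score.
scanner_findings = {
    ("web-01", "CVE-2021-44228"): 10.0,
    ("db-02", "CVE-EXAMPLE-0001"): 9.1,
    ("hr-app", "CVE-EXAMPLE-0002"): 5.3,
}

# Hypothetical validation outcomes for the findings that were actually tested.
validation_results = {
    ("web-01", "CVE-2021-44228"): "blocked",       # e.g., a WAF stopped the exploit
    ("hr-app", "CVE-EXAMPLE-0002"): "exploitable",
}


def split_findings(findings, results):
    """Split scanner findings into validated-exploitable and not-yet-validated."""
    exploitable, untested = [], []
    for key in findings:
        status = results.get(key)
        if status == "exploitable":
            exploitable.append(key)
        elif status is None:
            untested.append(key)  # keep it visible until it has been validated
        # "blocked" findings drop out of the urgent queue
    # Within each bucket, order by theoretical score, highest first.
    exploitable.sort(key=findings.get, reverse=True)
    untested.sort(key=findings.get, reverse=True)
    return exploitable, untested


exploitable, untested = split_findings(scanner_findings, validation_results)
print("exploitable:", exploitable)
print("awaiting validation:", untested)
```

In this toy data the 10.0 finding drops out because a control blocked it, while the 5.3 finding is the one confirmed as exploitable, mirroring the scanner-versus-validation contrast described above.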

Key Differences

| Category | Vulnerability Scanning | Automated Security Validation (ASV) |
|---|---|---|
| Purpose | Identifies known issues such as missing patches, misconfigurations or outdated software via signatures and configuration checks. | Validates whether vulnerabilities and exposures can actually be exploited in the real environment using safe adversarial testing. |
| Detection vs Validation | Detects potential vulnerabilities but cannot confirm real exploitability or business impact. | Attempts safe exploitation to validate impact. Shows whether controls (such as NGFW, WAF, IPS, EDR) block attacks or whether vulnerabilities can be chained into real compromise. |
| Context & Prioritization | Treats vulnerabilities mostly in isolation, often resulting in long lists of theoretical risks. | Adds adversarial context. Shows which issues can be weaponized, chained or abused to reach critical assets. Converts raw findings into validated, prioritized exposures. |
| False Positives / Noise | Generates large volumes of alerts; can overwhelm teams with false positives or minor issues. “Up to 40% of scanner alarms are false positives.” | Cuts through noise by discarding non-exploitable issues. Focuses only on findings that lead to meaningful attack paths. Reduces wasted effort and security-IT friction. |
| Volume Challenge (40K+ CVEs/year) | CVSS/EPSS may label 61% of CVEs as critical without considering environmental exploitability. Causes alert fatigue and misprioritization. | Validates real-world risk. Highlights which CVEs matter in your environment and which do not. Prevents teams from ignoring real threats buried under theoretical ones. |
| Frequency & Timeliness | Typically runs weekly or monthly. Can miss issues emerging between scans. Some environments limit scan frequency due to system impact. | Continuous or near real-time. Detects new exposures as they emerge. Integrates threat intel to test new exploits as they appear, without waiting for scanner plugin updates. |
| Remediation Guidance | Often provides generic advice (“apply vendor patch”). Limited environment-specific guidance. | Provides actionable, tailored mitigation steps (e.g., specific firewall rules, compensating controls, disabling vulnerable protocols). Can integrate with SOAR/patch tools for automated remediation. |
| Depth vs Breadth | Broad coverage but shallow understanding; identifies issues but cannot confirm exploit chains or post-compromise impact. | Broad and deep. Simulates attacker behavior to show how vulnerabilities chain into lateral movement or privileged access. Focuses on crown-jewel impact. |
| Integration Role | Works as a discovery tool. Generates input data. | Uses scanner findings as inputs and then validates them. Confirms whether “critical” scanner findings are actually exploitable, or whether “medium” findings can lead to full domain compromise. |
| Outcome | Produces a list of potential issues; remediation is often manual and slow. | Produces validated, prioritized exposures and confirms that fixes work through automated retesting. Strengthens exposure management end-to-end. |

Red Teaming vs. Automated Security Validation

Disclaimer: Breach and Attack Simulation (BAS) is the most effective approach for automating continuous red teaming to assess prevention and detection layers within Automated Security Validation. By simulating the full attack kill chain, from initial access (via CVE exploitation) to impact (e.g., ransomware), BAS delivers a real-time, automated view of an organization’s defenses. It uses observed TTPs (tactics, techniques, and procedures) from both known and emerging attack campaigns, ensuring that defenses are continuously validated against the latest and most relevant threats.

Red teaming is a resource-intensive, full-scope simulation conducted by humans to emulate real attacker tactics, typically on an annual basis. It tests detection and response using stealth techniques but is limited by its infrequency.

Automated Security Validation complements red teaming by offering continuous simulations of a wide range of attack techniques, such as discovery, privilege escalation, credential access, and ransomware campaigns. ASV runs continuously, validating controls against adversary TTPs observed in the wild, including newly disclosed zero-day exploits and public proof-of-concept (PoC) exploits.
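One simple way to model a BAS kill-chain scenario is to walk the stages in order and record where defenses prevent, detect, or silently miss each step. The sketch below assumes a hypothetical four-stage ransomware scenario and stops at the first prevented stage; actual BAS products may instead run each stage independently, so treat this as one possible model rather than a description of any product.

```python
# Hypothetical ransomware scenario: ordered kill-chain stages and the outcome
# observed for each simulated step ("prevented", "detected", or "missed").
SCENARIO = [
    ("initial access: CVE exploitation", "missed"),
    ("execution: payload launch", "detected"),
    ("credential access", "missed"),
    ("impact: file encryption (ransomware)", "prevented"),
]


def run_scenario(stages):
    """Walk stages in order and stop at the first one a control prevents."""
    alerts, silent_steps = 0, []
    for stage, outcome in stages:
        if outcome == "prevented":
            return {"stopped_at": stage, "alerts": alerts, "silent_steps": silent_steps}
        if outcome == "detected":
            alerts += 1  # not blocked, but the SOC was alerted
        else:
            silent_steps.append(stage)  # succeeded with no block and no alert
    # No stage was prevented: the full chain completed.
    return {"stopped_at": None, "alerts": alerts, "silent_steps": silent_steps}


print(run_scenario(SCENARIO))
```

The `silent_steps` list is where defenses "fail silently": those stages succeeded without a block or an alert, even though the chain was eventually stopped.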

While ASV lacks the creativity of red teams, it is more cost-effective, scalable, and provides ongoing validation of security controls. Many organizations use both: ASV for regular testing and red teams for high-level evaluations and novel attack paths. Leading ASV solutions are now marketed as offering “automated red teaming,” delivering similar capabilities with unmatched frequency and scale.

Key Differences

| Category | Breach and Attack Simulation (BAS) | Red Teaming |
|---|---|---|
| Coverage | Provides continuous, broad coverage of the entire attack surface (on-premises, cloud, and hybrid environments), validating defenses in real time. | Focuses on highly targeted, complex attack scenarios using human-driven tactics to assess specific areas or weaknesses in the environment. |
| Frequency | Ongoing and automated, providing continuous testing and monitoring that helps identify weaknesses as they arise. | Periodic, typically conducted a few times per year due to its intensive nature and high resource cost. |
| Execution Style | Automated, non-intrusive testing within production environments, simulating common attack techniques (e.g., phishing, ransomware, CVE exploitation). | Manual, intrusive testing that relies on human creativity and intuition to simulate advanced, novel attack scenarios. |
| Key Enabler Technologies | Automated tools that simulate attack scenarios, such as Picus BAS, running pre-configured attack techniques. | Relies heavily on human expertise, custom tools, and creative attack techniques, including social engineering, physical penetration, and advanced network manipulation. |
| Automation & Consistency | Highly automated and repeatable, ensuring consistent quality and efficiency in identifying vulnerabilities. Provides fixed-cost, scalable testing. | Human-dependent, with results varying according to the tester's skills and creativity. Not easily repeatable without re-engagement. |
| Depth vs Breadth | Excels at breadth, simulating a wide range of attacks, verifying detection, and assessing control effectiveness across the attack surface. | Known for depth, focusing on advanced, complex attack paths that require human expertise to identify vulnerabilities that are difficult to automate. |
| Value and Context | Provides real-time, contextual risk validation, showing how vulnerabilities can be exploited and chained to create attack paths. Ensures remediation validation. | Delivers in-depth analysis of security posture but may not always validate how findings connect across the attack surface. Typically requires separate follow-up for remediation validation. |
| Overall Role | Continuous security assurance that monitors and validates security defenses, helping organizations maintain an up-to-date security posture. Complements other testing methods. | Comprehensive, human-driven analysis aimed at uncovering hidden or novel attack vectors and testing how well the organization’s defenses respond under real-world conditions. |

In summary, Automated Security Validation differs from traditional methods by offering:

- Continuous and high-frequency testing instead of point-in-time audits.

- Broad, automated coverage of the attack surface instead of narrow, manual focus.

- Adversary-perspective validation of real risk (showing what an attacker could do) rather than just listing theoretical issues.

- Greater scalability and consistency, freeing up human experts for more advanced or creative security improvements.

- Faster remediation cycles, as issues are found and fixed in near-real-time, and fixes are verified automatically.

This doesn’t make traditional approaches obsolete; rather, ASV is complementary and in many ways an evolution of them. Organizations still leverage manual pen tests and red teams for deep dives and compliance needs, but they increasingly rely on ASV to continuously guard the gate and keep the security posture robust day-to-day.

In fact, industry experts suggest incorporating ASV into a holistic program.

Use it to cover 95% of routine validation, and reserve human-led efforts for the remaining tricky 5%. This leads to a stronger overall security stance and more efficient use of resources.

Which Automated Security Validation Tool Do You Need: BAS, Automated Pentesting, or Both?

Picus integrates both Breach and Attack Simulation and Automated Pentesting (APT) capabilities within its platform, each playing a significant and widely adopted role.

Below is a detailed comparison of BAS and APT capabilities.

Key Differences Between BAS and Automated Pentesting

Deployment and Testing Approach

- BAS: Uses an agent-based approach for continuous, non-intrusive testing. It's deployed to simulate attacks in production environments without disrupting operations.

- APT: Uses an agentless, stager-based approach. It performs deeper, more intrusive testing, often simulating the exploitation of real-world vulnerabilities.

Security Coverage

- BAS: Ideal for continuous security validation, detecting configuration drift, and providing ongoing threat readiness. It integrates well with other security tools like SIEM, EDR, and NGFW.

- APT: Focuses on deeper vulnerability validation and attack path analysis, offering insights into exploitable risks within an infrastructure.

Use Cases

- BAS: Best for continuous baselining, identifying security control gaps, validating detection visibility, and assessing the efficacy of security controls (e.g., SIEM/EDR rules).

- APT: Suited for identifying new vulnerabilities, simulating real-world exploitation, and assessing the impact of vulnerabilities in attack paths.

Integration with Existing Security Systems

- BAS: Has broad integration capabilities with security tools and provides real-time detection of gaps in detection systems, alerting teams immediately.

- APT: While it doesn’t integrate with tools like SIEM, it validates vulnerabilities and attack paths, providing actionable findings for teams.

Complementary Roles

- BAS: Complements manual penetration tests and ongoing Red Team efforts by automating regular validation of known threats and TTPs.

- APT: Complements BAS by offering a deeper dive into exploitation, showing the real impact of vulnerabilities on the organization’s attack surface.

Recommendation for Organizations

- BAS is ideal for organizations looking for continuous, proactive security validation with real-time detection of gaps, useful for improving threat response and ensuring compliance.

- APT is best for organizations that need in-depth vulnerability assessment and attack path validation for a deeper understanding of their security posture.

Strategic Approach

- BAS First: Start by deploying BAS to establish a baseline understanding and continuously validate security posture.

- Follow Up with APT: After establishing a baseline, use APT to validate specific attack paths and vulnerabilities, integrating the findings into your vulnerability management processes.

The combination of both BAS and APT in a security program provides a comprehensive, proactive approach to threat detection, vulnerability management, and risk reduction, each addressing specific needs within the security lifecycle.

Data-backed Evidence of Benefits of Automated Security Validation (ASV)

Automated Security Validation is designed to maximize ROI and minimize risk by providing contextualized, real-world evidence of vulnerabilities. Picus' platform delivers tangible, data-backed improvements, enabling organizations to make smarter, more efficient security decisions.

The comparison below highlights the stark contrast between traditional CVSS-based methods (the baseline for most organizations that haven't yet implemented exposure validation) and Exposure Validation with Picus. These metrics show how Picus enhances the speed, accuracy, and effectiveness of vulnerability management:

| KPI | Baseline (CVSS) | Exposure Validation with Picus Security |
|---|---|---|
| Backlog | 9,500 findings | 1,350 findings |
| MTTR | 45 days | 13 days |
| Rollbacks | 11 per quarter | 2 per quarter |

Key Findings

- Backlog Reduction: By leveraging Picus' Exposure Validation, organizations reduced their vulnerability backlog from 9,500 findings (using traditional CVSS-based scanning) to just 1,350 findings. This significant reduction highlights the efficiency of ASV in filtering out non-exploitable vulnerabilities, enabling teams to focus on high-risk areas.

- Faster Remediation: The mean time to remediation (MTTR) improved dramatically, decreasing from 45 days to just 13 days. This accelerated remediation process directly correlates with Picus' ability to validate vulnerabilities in real-time, ensuring faster response times and fewer delays in addressing critical security risks.

- Reduced Rollbacks: The frequency of rollback incidents saw a dramatic drop, from 11 per quarter to just 2 per quarter. By providing more accurate, actionable insights, Picus minimizes false positives and reduces the need for remediation adjustments, enhancing overall operational efficiency.
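The relative improvements behind these findings follow directly from the KPI table. A short Python calculation, using only the figures reported above:

```python
# Baseline (CVSS) vs. exposure-validation values from the KPI table.
KPIS = {
    "backlog_findings": (9_500, 1_350),
    "mttr_days": (45, 13),
    "rollbacks_per_quarter": (11, 2),
}


def pct_reduction(before, after):
    """Percentage decrease from `before` to `after`, rounded to one decimal."""
    return round(100 * (before - after) / before, 1)


for name, (before, after) in KPIS.items():
    print(f"{name}: {pct_reduction(before, after)}% lower")
# backlog_findings: 85.8% lower
# mttr_days: 71.1% lower
# rollbacks_per_quarter: 81.8% lower
```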

Leading Automated Security Validation Tools and Platforms

Modern Automated Security Validation (ASV) solutions are rapidly evolving. While many vendors specialize in either Breach and Attack Simulation (BAS) or Automated Penetration Testing, some, like Picus, offer both capabilities in a unified platform.

A key trend in ASV is Exposure Validation, which integrates offensive simulations, vulnerability context, and control-effectiveness assessments into one solution. Picus’s platform combines BAS, automated pentests, and attack-path validation as part of its Exposure Validation capabilities.

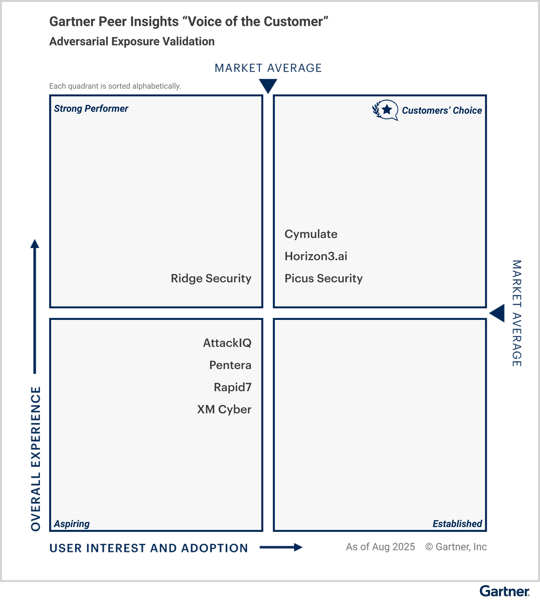

Figure 2. 2025 Gartner Peer Insights™ "Voice of the Customer for Adversarial Exposure Validation," October 30, 2025.

We are excited to announce that Picus Security has been recognized as a Customers’ Choice in the 2025 Gartner Peer Insights™ "Voice of the Customer for Adversarial Exposure Validation" report, released on October 30, 2025.

- Picus joins Cymulate and Horizon3.ai in the Customers' Choice quadrant.

- Vendors in this quadrant are listed alphabetically, and no ranking is implied.

- Vendors placed in the upper-right “Customers’ Choice” quadrant have met or exceeded the market average for both User Interest and Adoption and Overall Experience.

At Picus, our success is defined by the results we deliver for our customers every day. According to the Gartner report, based on 71 reviews as of 31 August 2025, Picus Security was recognized by our peers:

- 98% Willingness to Recommend: The highest percentage among all vendors listed in the report.

- 4.8 out of 5 Overall Rating: The highest overall rating (tied) listed in the report.

- Customer Satisfaction: 80% of reviewers gave Picus Security a 5-star rating.

Enterprise Use Cases for Automated Security Validation

Continuous Validation in Hybrid Cloud Environments

In hybrid cloud environments that combine on-prem, private, and public clouds, ASV helps ensure there are no security gaps between domains. Picus simulates attack paths across on-prem and cloud, uncovering misconfigurations like permissive cloud IAM roles or improper network connectivity. For instance, ASV might detect an Active Directory sync issue allowing on-prem accounts access to Azure, exposing a potential attack path.

ASV (via Cloud Security Validation) also validates cloud misconfigurations, for example by attempting data exfiltration from an improperly configured S3 bucket. It also checks that cloud workload protections, such as IDS/IPS, detect malicious activity in cloud VMs or containers.

Additionally, ASV continuously verifies network segmentation, simulating lateral movement across on-prem, cloud, and OT environments. It catches misconfigurations, such as open firewall rules, that routine audits may miss.

Overall, ASV provides continuous, proactive audits from an attacker’s perspective, ensuring security controls adapt as cloud services evolve. For example, ASV helped a financial institution discover an unsecured connection between a developer’s cloud environment and the corporate network, preventing potential attacks.

Enhancing Large-Scale SOC Operations and Incident Response

For Security Operations Centers (SOCs), Automated Security Validation boosts detection, reduces alert fatigue, and streamlines incident response.

- Detection Rule Validation: ASV simulates attacks to verify SIEM and EDR systems trigger proper alerts. Picus’ Detection Rule Validation ensures security controls are working, flagging missing alerts for new rule creation.

- Training and Drills: ASV enables SOC drills, simulating attacks like ransomware to help teams practice detection and response, improving key performance metrics like mean time to detect lateral movement.

- Reducing Noise: ASV filters out non-exploitable events, letting the SOC prioritize high-impact threats and improve response efficiency.

- Incident Response Validation: After an incident, ASV runs simulations to ensure patches and signatures are effective, automating regression testing.

- SOAR Integration: ASV integrates with SOAR platforms to automate responses. If an open port is found, SOAR can isolate the host, minimizing exposure.

ASV acts as an automated QA system, continuously validating security and enhancing SOC efficiency.
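The detection-rule validation loop can be sketched as a simple classification of each simulated technique by outcome: blocked, allowed but alerted, or allowed silently. In this hypothetical Python sketch, the `blocked` and `alert_fired` flags are assumed inputs (in practice they would come from the simulation agent and from a SIEM or EDR query); the silent failures are the techniques that need new detection rules.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    BLOCKED = "blocked"          # a preventive control stopped the action
    DETECTED = "detected"        # not blocked, but an alert fired
    SILENT = "silent_failure"    # neither blocked nor alerted


@dataclass(frozen=True)
class SimulationResult:
    technique_id: str   # e.g. a MITRE ATT&CK technique ID such as "T1003"
    blocked: bool       # assumed to be reported by the simulation agent
    alert_fired: bool   # assumed to be looked up in the SIEM/EDR


def classify(result: SimulationResult) -> Outcome:
    """Map one simulation result to blocked / detected / silent failure."""
    if result.blocked:
        return Outcome.BLOCKED
    if result.alert_fired:
        return Outcome.DETECTED
    return Outcome.SILENT


def missing_detections(results: list[SimulationResult]) -> list[str]:
    """Techniques that got through without any alert: candidates for new rules."""
    return sorted({r.technique_id for r in results if classify(r) is Outcome.SILENT})
```

Re-running the same technique list after a patch or rule change and comparing the output of `missing_detections` with the previous run is one simple way to approximate the regression testing described above.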

Continuous Compliance and Audit Readiness

Enterprises in regulated industries (finance, healthcare, utilities…) can use ASV to maintain continuous compliance with security testing requirements. Many regulations and standards (PCI DSS, NIST CSF, ISO 27001, GDPR, DORA, etc.) mandate regular security control testing and proof of effectiveness. Instead of scrambling before an audit to gather evidence of annual penetration tests and similar exercises, organizations can rely on ASV reports that demonstrate compliance continuously.

For example, PCI DSS requires firewall rule reviews and periodic network segmentation tests; an ASV platform can generate reports showing that segmentation is tested daily by simulating traffic between isolated network zones and confirming that nothing leaks. This serves as evidence that "in-scope systems are properly segmented," a key concern for PCI auditors.
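A very reduced version of a segmentation test is a TCP connection attempt from a host in one zone to services in a zone that should be unreachable. The Python sketch below assumes you supply the target list (the function names are hypothetical) and treats any failed connection, whether refused or timed out, as blocked; a real ASV platform simulates far more protocols and attack behaviors than a single TCP handshake. Only run it against networks you are authorized to test.

```python
import socket


def reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unroutable: treat the path as blocked.
        return False


def segmentation_leaks(
    targets: list[tuple[str, int]], timeout: float = 2.0
) -> list[tuple[str, int]]:
    """Run from a host in one zone; return targets in the isolated zone that answered."""
    return [(host, port) for host, port in targets if reachable(host, port, timeout)]
```

Run on a schedule, an empty result from `segmentation_leaks` is the kind of repeatable evidence that can back a segmentation report; any non-empty result points at a specific host and port to investigate.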

Similarly, for cyber insurance or board reporting, ASV provides actionable, data-driven metrics that offer unparalleled visibility into your security performance.

For instance, using the Picus Platform, one of our customers could say: "This quarter, we executed 5,000 attack simulations, achieving an 80% block rate. Of the 20% of attacks that breached defenses, only 5% were classified as critical, while the rest were medium or low priority. The critical vulnerabilities were remediated in less than 24 hours, fully aligned with our SLA and playbook, while the non-critical issues are being strategically addressed to optimize resource allocation."

These highly specific, real-time metrics were once nearly impossible to gather manually. With ASV, they are captured automatically, offering a clear, actionable overview that drives smarter decision-making and demonstrates measurable risk reduction.
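The metrics in the customer quote are straightforward aggregates over simulation results. This Python sketch assumes each result records whether the attack was blocked and a severity label; the field names and labels are hypothetical, not the Picus Platform's data model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackResult:
    blocked: bool
    severity: str  # hypothetical labels: "critical", "high", "medium", "low"


def quarterly_summary(results: list[AttackResult]) -> dict:
    """Aggregate simulation results into block-rate and severity metrics."""
    total = len(results)
    breached = [r for r in results if not r.blocked]
    by_severity = Counter(r.severity for r in breached)
    return {
        "simulations": total,
        "block_rate": (total - len(breached)) / total if total else 0.0,
        "breached": len(breached),
        "critical_share_of_breaches": (
            by_severity["critical"] / len(breached) if breached else 0.0
        ),
        "breaches_by_severity": dict(by_severity),
    }
```

With the figures from the quote (5,000 simulations, 4,000 blocked, and 50 of the 1,000 breaches rated critical, assuming the other 950 are medium or low), it reports a block rate of 0.8 and a critical share of 0.05.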

Gartner's CTEM framework explicitly ties into this: continuous validation (ASV) combined with continuous assessment yields strong documentation of an organization's security posture over time.

Enterprises can show auditors a dashboard of compliance-related attack scenarios (like tests for SOX IT controls or HIPAA safeguards) and their outcomes. Because ASV tools often map to controls and frameworks, it’s easy to see, for example, “All NIST CSF Category PR.PT (Protective Technology) controls have been validated via 20 different simulations, with results X, Y, Z.”
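Control mapping can be illustrated by tagging each simulation with the framework subcategories it exercises and rolling the results up to the category level. In this Python sketch, the tags (NIST CSF subcategory IDs such as `PR.PT-1`), the result format, and the meaning of `passed` (the control blocked or detected the attack) are all assumptions; a simulation tagged with several subcategories in the same category is counted once for that category.

```python
from collections import defaultdict


def coverage_by_category(results: list[dict]) -> dict[str, dict[str, int]]:
    """Roll simulation outcomes up to framework categories, e.g. "PR.PT-1" -> "PR.PT"."""
    summary: dict[str, dict[str, int]] = defaultdict(
        lambda: {"simulations": 0, "passed": 0}
    )
    for result in results:
        # A set ensures a simulation tagged PR.PT-1 and PR.PT-3 counts once for PR.PT.
        categories = {tag.split("-")[0] for tag in result["controls"]}
        for category in categories:
            summary[category]["simulations"] += 1
            if result["passed"]:
                summary[category]["passed"] += 1
    return dict(summary)
```

A per-category count of simulations and passes is the raw material for a statement like the PR.PT example above.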

This not only satisfies auditors but also builds executive confidence in the security program.

Threat Exposure Management and Risk Reduction with Picus Platform

Organizations, from SMBs to large enterprises, are increasingly integrating automated security validation into their Continuous Threat Exposure Management (CTEM) programs to better assess and mitigate cyber risk.

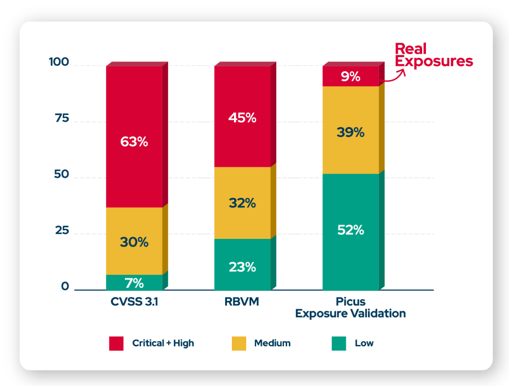

Through data analysis from the Picus Platform, we've identified a significant gap between traditional security assessment methods and real-world exposure risks.

The following illustrates this discrepancy:

- CVSS 3.1 classifies 63% of vulnerabilities as high or critical. However, many of these do not reflect actual exploitable risks in the real world.

- Risk-Based Vulnerability Management (RBVM) flags 45% as high-risk, but not all of these vulnerabilities are exploitable in a live environment.

In contrast, Picus Exposure Validation offers a more accurate, context-driven view of vulnerabilities. Our simulations show that only 9% of vulnerabilities labeled as high or critical are actually exploitable exposures that pose a risk.

While the remaining vulnerabilities are important, they do not present the same immediate threats and can be deprioritized, allowing security teams to focus resources where they are truly needed.

This data demonstrates how Picus Exposure Validation provides actionable insights by focusing on real exposures instead of relying solely on broad vulnerability categories. By validating vulnerabilities in context, organizations can minimize unnecessary distractions, reduce remediation backlogs, and optimize their defense strategies.
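The gap between severity-based and validation-based prioritization can be sketched in a few lines of Python. The severity bands follow the CVSS v3.1 qualitative rating scale (Low 0.1 to 3.9, Medium 4.0 to 6.9, High 7.0 to 8.9, Critical 9.0 to 10.0); the `exploitable` flag is an assumed input from an exposure-validation run, and the `triage` function and finding IDs are hypothetical placeholders.

```python
from dataclasses import dataclass


def cvss_severity(score: float) -> str:
    """Qualitative severity rating from the CVSS v3.1 specification."""
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


@dataclass(frozen=True)
class Finding:
    finding_id: str
    cvss: float
    exploitable: bool  # assumed outcome of an exposure-validation run


def triage(findings: list[Finding]) -> tuple[list[Finding], list[Finding]]:
    """Return (all high/critical findings, the subset validated as exploitable)."""
    high_or_critical = [
        f for f in findings if cvss_severity(f.cvss) in ("high", "critical")
    ]
    validated = [f for f in high_or_critical if f.exploitable]
    return high_or_critical, validated
```

The ratio `len(validated) / len(high_or_critical)` is the kind of figure behind the 9% statistic above; the sketch does not reproduce Picus's data, it only shows where the two prioritization lists diverge.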

Ready to enhance your organization's security posture? See how Picus continuously validates your defenses, identifies exploitable vulnerabilities, and helps streamline risk management. Schedule your demo today.

TL;DR: The Ultimate Guide to Automated Security Validation (ASV) in 2025

- What is ASV: Automated Security Validation (ASV) uses automated tools to continuously test security defenses with real-world attack simulations, ensuring up-to-date security posture and prioritization of high-risk threats.

- How ASV Works: ASV simulates adversary techniques to identify exploitable vulnerabilities in real-time, unlike traditional static methods. It adapts to changes in the environment and provides actionable insights, showing which vulnerabilities are exploitable and which defenses are working.

- Key Components of ASV:

- Adversarial Exposure Validation (AEV) includes Breach and Attack Simulation (BAS) and Automated Penetration Testing (APT) to validate vulnerabilities continuously.

- BAS simulates real-world attack tactics, while APT automates penetration testing to chain vulnerabilities and reveal attack paths.

- BAS in Action: BAS helps prioritize remediation by assessing the actual exploitability of vulnerabilities, such as with the Log4j case, where the severity score dropped based on context, reducing unnecessary urgency.

- Log4j Example: CVSS Score: 10.0 → EPSS: 9.1 → Asset Criticality: 7.2 → Control Effectiveness: 5.3.

- This dynamic scoring allowed teams to deprioritize remediation efforts on non-exploitable instances.

- Continuous Validation: ASV constantly checks security controls against evolving threats, offering a dynamic, up-to-date view of an organization’s security posture, ensuring no gaps between periodic tests.

- ASV vs Traditional Security Testing: Unlike one-time methods like manual pentesting and vulnerability scanning, ASV runs continuously, offering real-time validation with greater coverage and consistency. It validates vulnerabilities in context, saving time and resources by deprioritizing non-exploitable risks.

- Key Benefits

- Reduced Backlogs: By focusing only on exploitable vulnerabilities, ASV dramatically reduces the vulnerability backlog.

- Data: From 9,500 findings (CVSS) to 1,350 findings with Picus Exposure Validation.

- Faster Remediation: ASV accelerates mean time to remediation (MTTR) and minimizes rollbacks by providing more accurate insights.

- Data: MTTR improved from 45 days (CVSS) to 13 days with Picus Exposure Validation.

- Reduced Rollbacks: Fewer remediation mistakes cut rollbacks from 11 per quarter to 2 per quarter.


- BAS vs APT: BAS is best for continuous security validation and real-time detection of gaps, while APT is suited for deeper security vulnerability analysis and attack path validation.

- Real-World Application: ASV is critical for securing hybrid cloud environments, enhancing SOC operations, and maintaining continuous compliance with standards such as PCI DSS and NIST CSF. It integrates with existing security tools, offering proactive threat management and streamlined risk reduction.

- Picus Platform: Picus integrates both BAS and APT in one platform, recognized as a Gartner Customers’ Choice for Adversarial Exposure Validation in the 2025 Gartner Peer Insights™ "Voice of the Customer for Adversarial Exposure Validation" report, released on October 30, 2025.

- Customer Feedback:

- 98% Willingness to Recommend

- 4.8/5 Overall Rating

- 80% of reviewers gave 5-star ratings

- Picus provides a unified approach to continuously validate security defenses, reduce vulnerabilities, and optimize risk management.


- Ready to start testing and strengthening your entire security stack (perimeter defenses, endpoints, cloud, Windows AD, and more) against both known and emerging threats? Schedule your demo today.
